Jake Freiberg

User Experience

Researching | Designing | Prototyping

Hi, I'm Jake.

I'm a researcher, designer, and prototyper.

Simply put, my passion lies in making interfaces that give people the power to do more, better.

Whether the structures are physical, digital, or mental, I'm forever drawn to improving them.

I also love to grow plants, play table tennis, and craft wood, metal, or synthetics into useful and/or pretty things.

| Year | 2014-2015 |
|---|---|
| Roles | Researcher, Designer, Developer, Fabricator |
| Collaborators | Bernhard Riecke, Alex Kitson, Tim Grechkin, and Yehia Madkour |
| Clients | Simon Fraser University, Perkins + Will |
| Tools | Unity, Revit, 3ds Max, C#, TrackIR, Oculus Rift DK2, Laser Cutting |
| Research Methods | Sequential Mixed Methods, Interviews, Focus Groups, Brainsketching, User Scenarios, Mixed Between-Within Experimental Design |
| Supplemental Resources | MSc Thesis, Thesis Slides, Thesis Presentation |

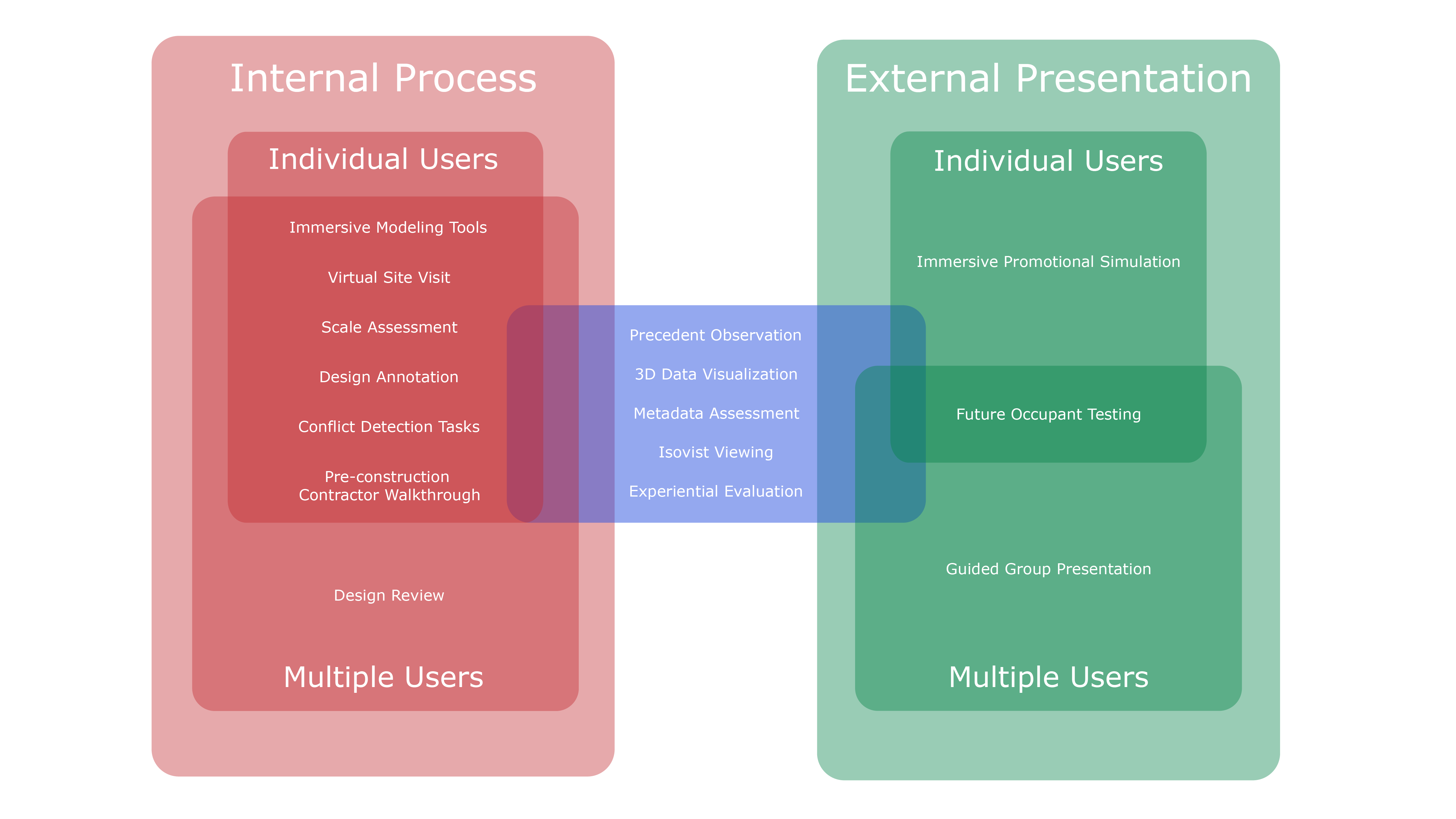

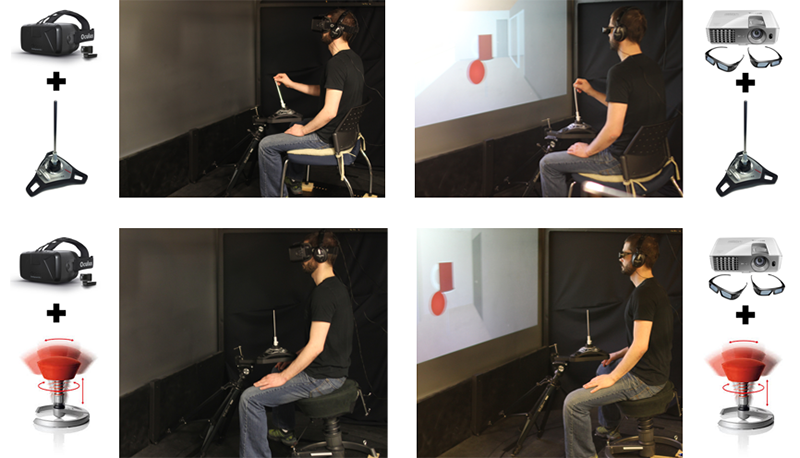

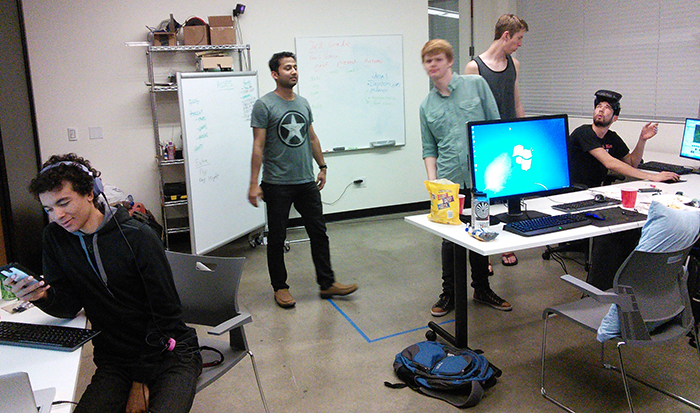

This was a three-phase, mixed-methods research project conducted with Perkins + Will during their design of the SFU Student Union Building. Our goal was to create and evaluate a VR interface for use in architectural design scenarios.

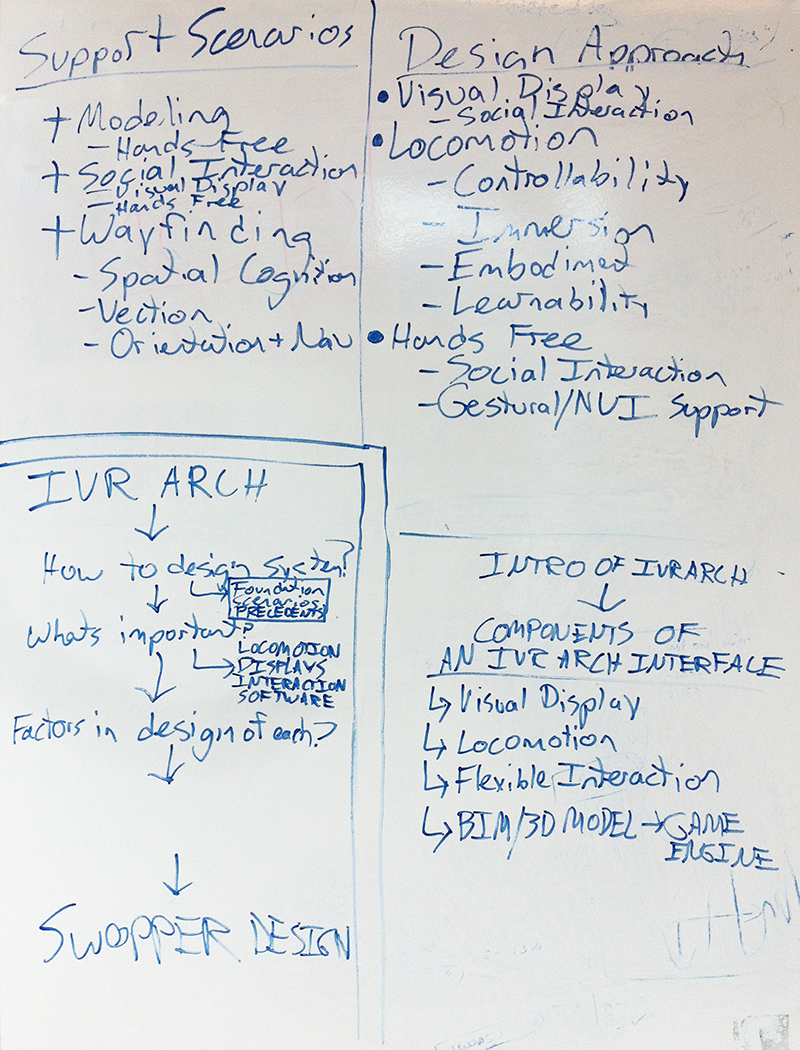

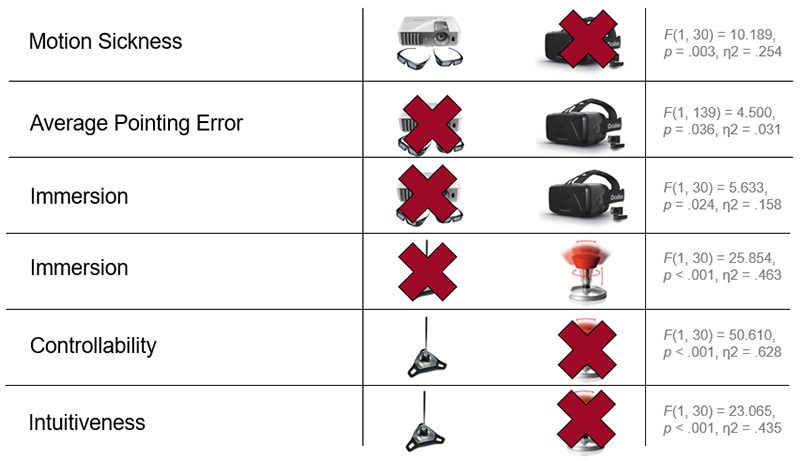

1) Exploration: Ran qualitative interviews and focus groups to gather design requirements, and outlined VR usage scenarios for the architectural design process.

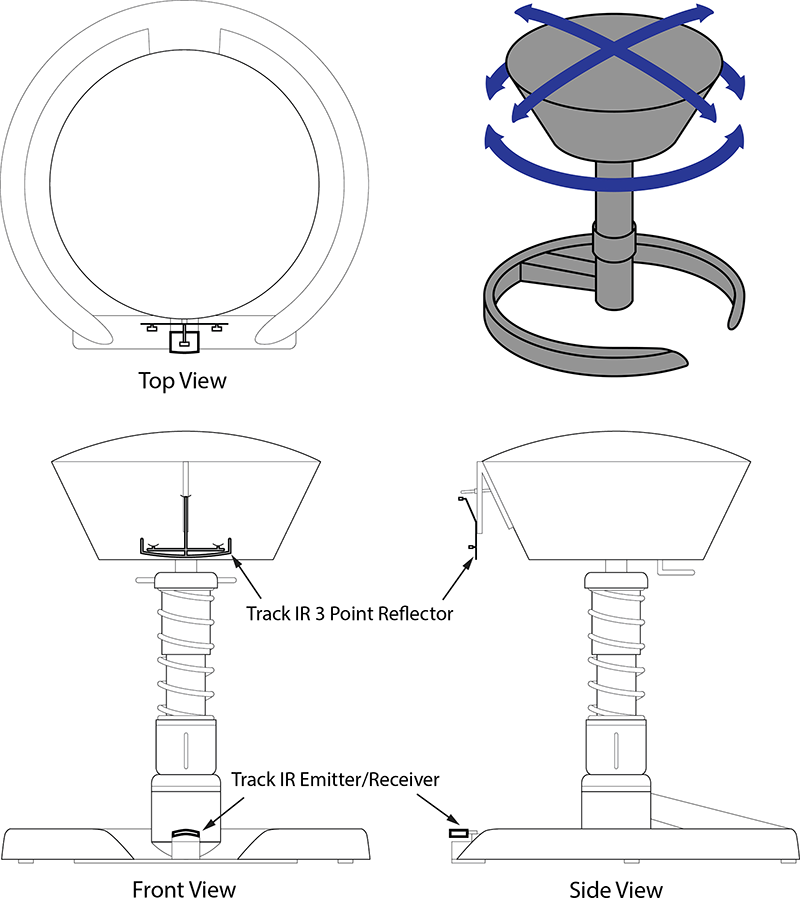

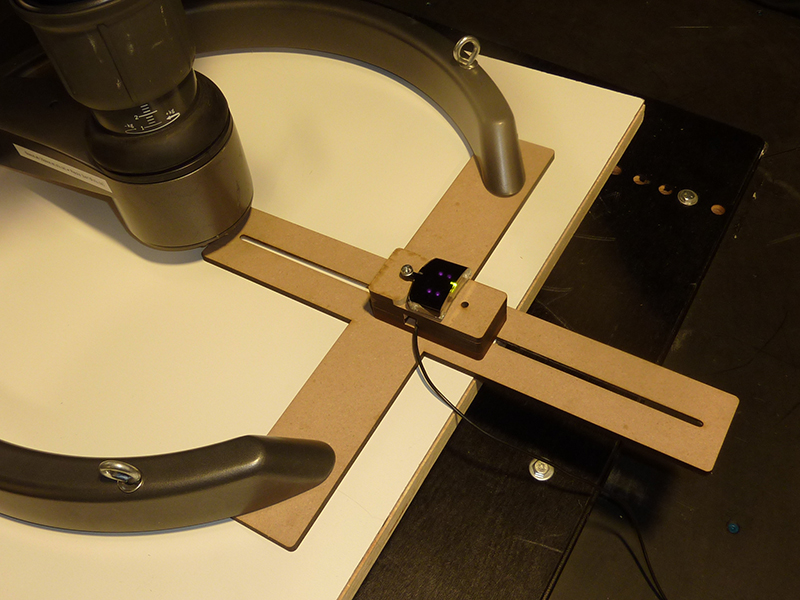

2) Design & Development: Based on the requirements gathered in phase 1, designed, constructed, and programmed a VR locomotion interface and a Revit-to-Unity model workflow for client presentations and internal review sessions.

3) Evaluation: Conducted a quantitative evaluation of the interface, using a mixed between-within experimental design to assess it against the requirements from phase 1.

| Year | 2016 |
|---|---|
| Roles | UX Designer |
| Collaborators | Evan Aubrey, Masashi Sato, Joey Koblitz, Anindya Dey, and Jeffrey Atwood |
| Client | Foundry10 Educational VR Hackathon |
| Tools | Unity, Blender, C#, HTC Vive |
| Awards | Best use of VR in Education at Foundry10 Educational VR Hackathon 2016 |

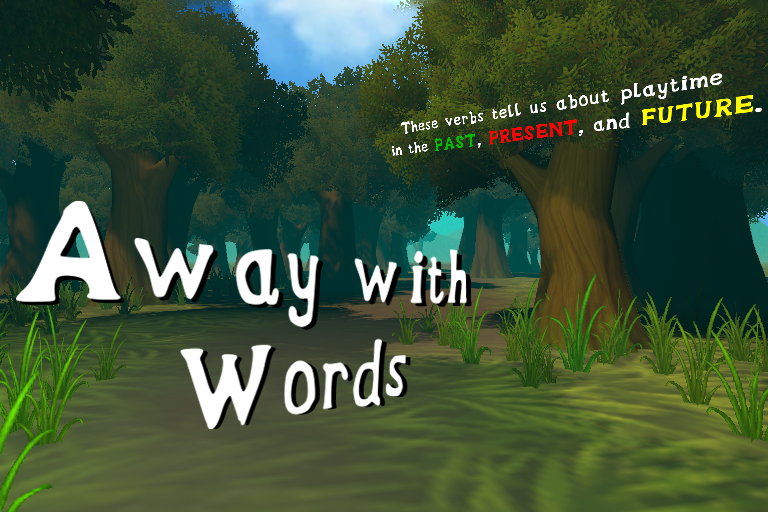

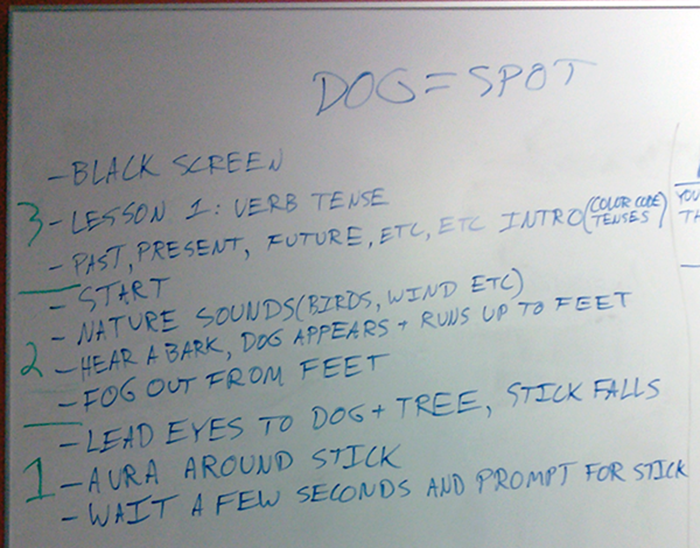

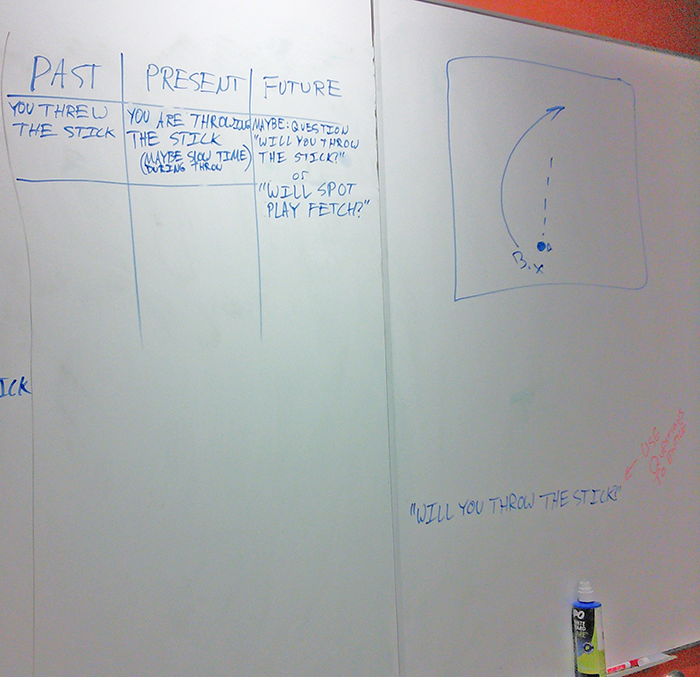

Over the course of two days, we designed and built an educational VR app in Unity to help elementary students learn verb tenses in an immersive, story-based environment. With this project, we won Best Use of VR Tech for Education at the 2016 Foundry10 Educational VR Hackathon.

| Year | 2015 |
|---|---|
| Roles | Project Initiator, Expedition Co-organizer |
| Collaborators | Reese Muntean |
| Client | Matterport |
| Tools | Matterport 3D Scanning Camera |
| Recognition | Featured in TechCrunch |

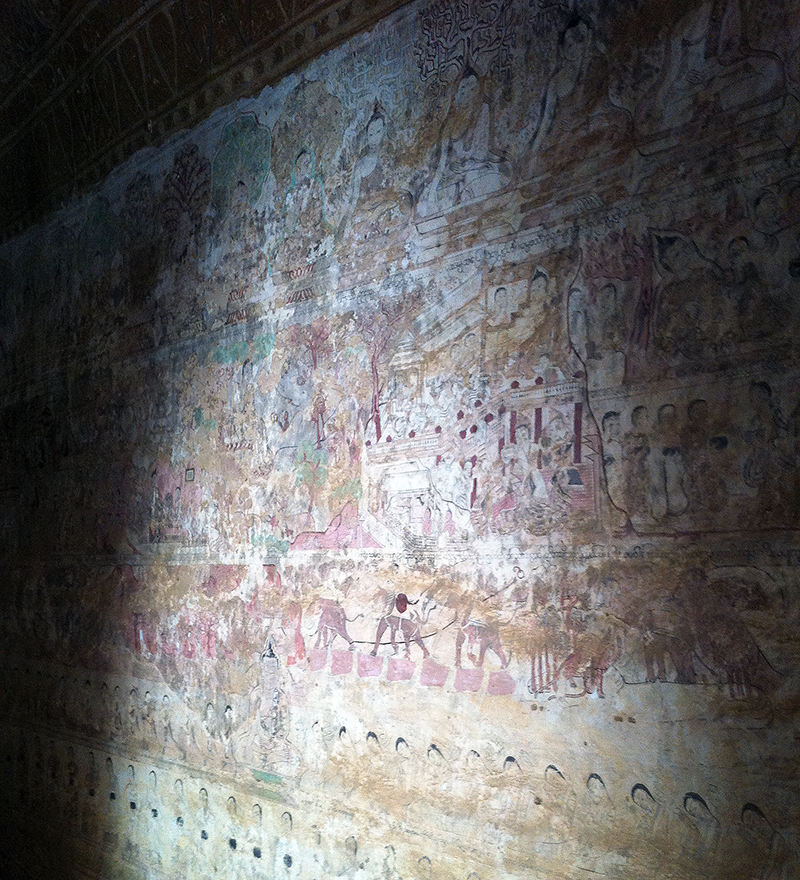

Intrigued by the prospect of 3D scanning spaces and its potential for documenting endangered architectural masterpieces, I contacted Matterport to propose a collaboration. After pitching the benefits of using their 3D scanning camera to promote content gathering, I organized and planned a trip to Myanmar and Cambodia to capture 3D scans of Buddhist and Hindu temples in Bagan and Angkor Wat. My good friend Reese Muntean joined the expedition, and her knowledge of photography and international travel was invaluable during the trek. The scans were among the most viewed and were key in securing a $30 million investment (see the TechCrunch promotional video). They were also among the first models used in the pilot program for Matterport's VR app.

| Year | 2016-2017 |
|---|---|
| Roles | Designer, Developer, and Fabricator |
| Collaborators | Bernhard Riecke and Yehia Madkour |
| Client | Perkins + Will |
| Tools | Unity, Unreal, 3ds Max, Revit, C#, vJoy, 3D Printing, Laser Cutting |
| Supplemental Resources | Perkins + Will |

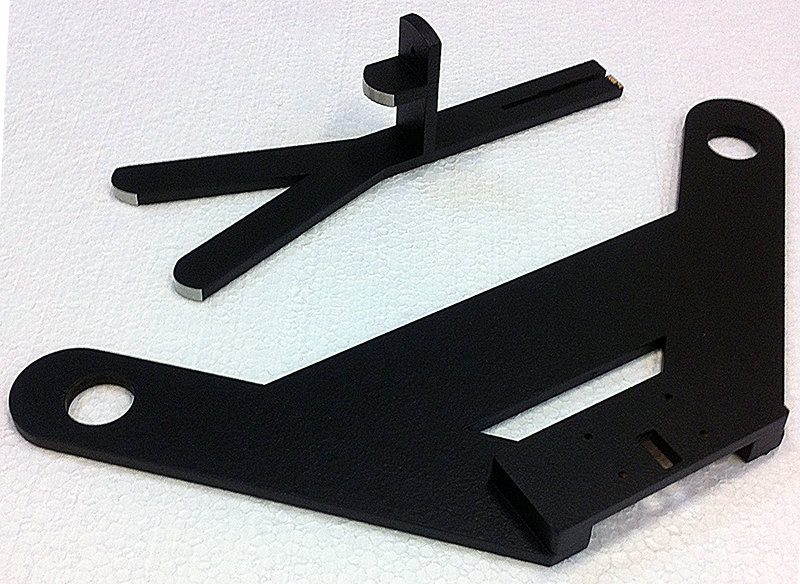

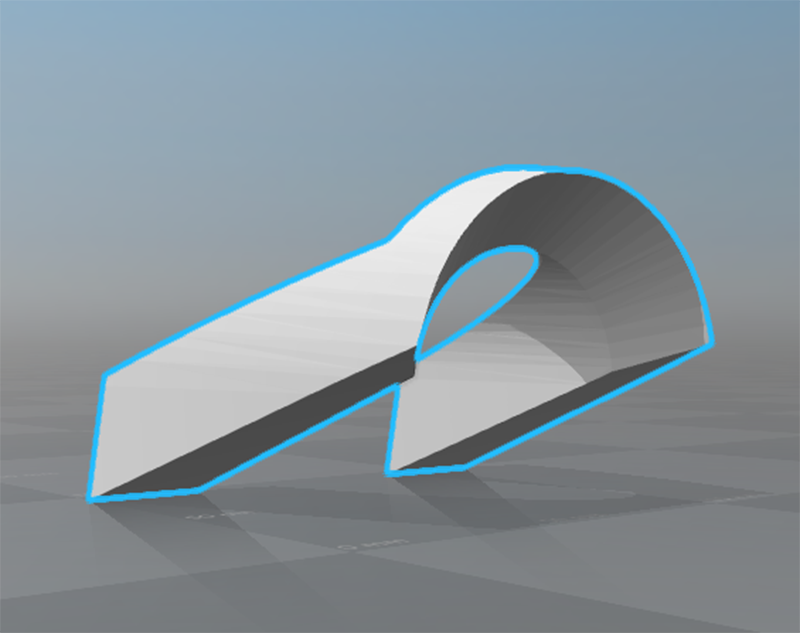

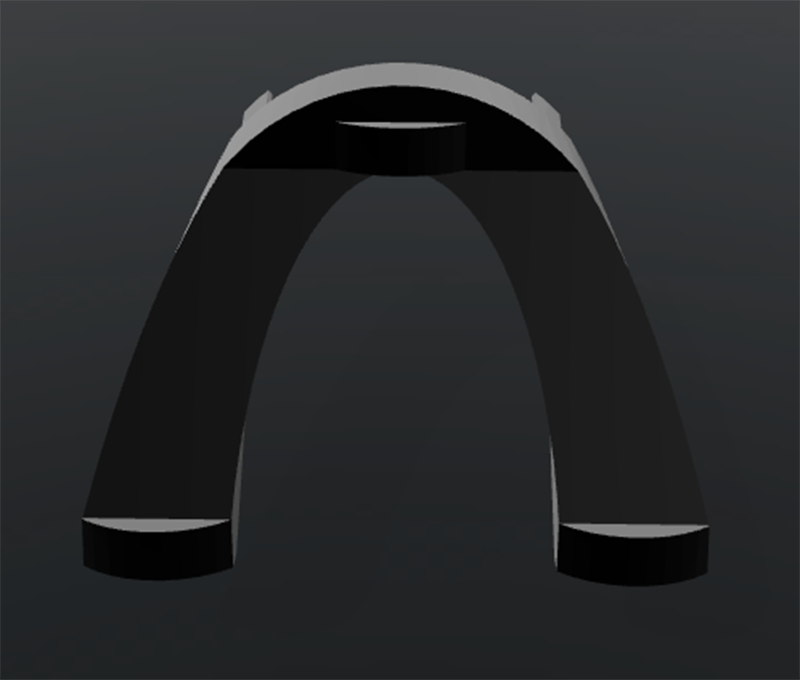

Prototyped and implemented a replicable VR visualization workflow for use with existing Building Information Modeling (BIM) software (Revit) and VR engines (Unity, Unreal). Also prototyped, programmed, and implemented the second iteration of a VR locomotion interface used in client presentations and internal review sessions.

| Year | 2015 |
|---|---|
| Roles | Teaching Assistant, Course Developer |
| Collaborators | Halil Erhan |
| Client | Simon Fraser University |
| Tools | SolidWorks, Laser Cutting |
| Supplemental Resources | IAT 337 Past Course Project Examples |

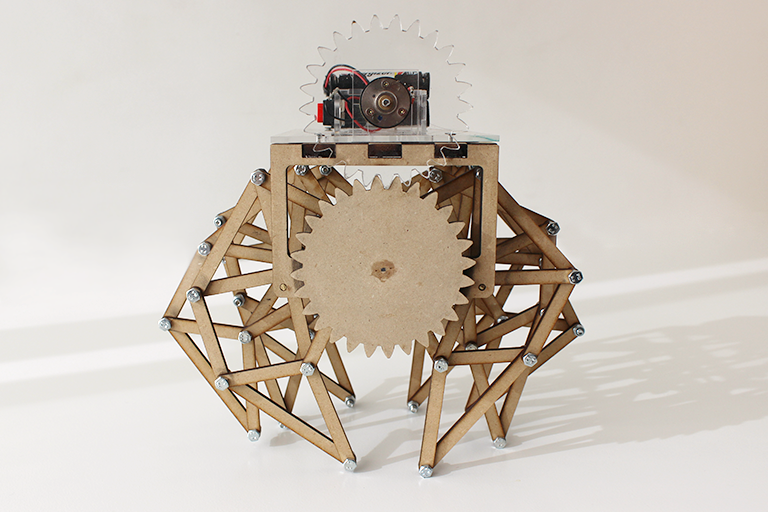

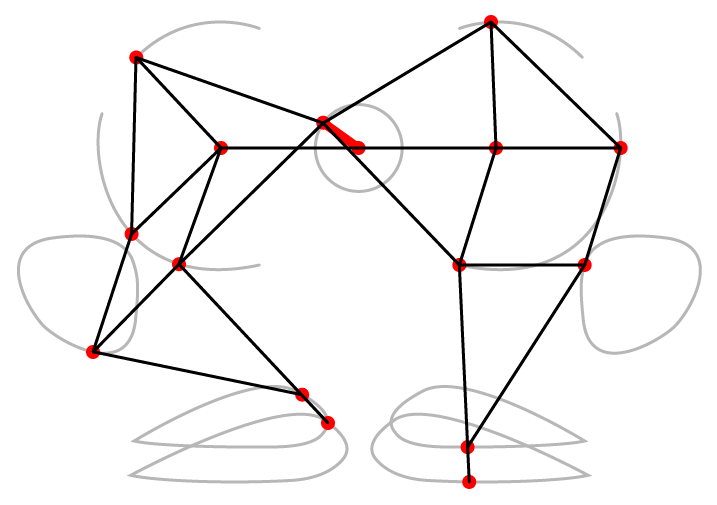

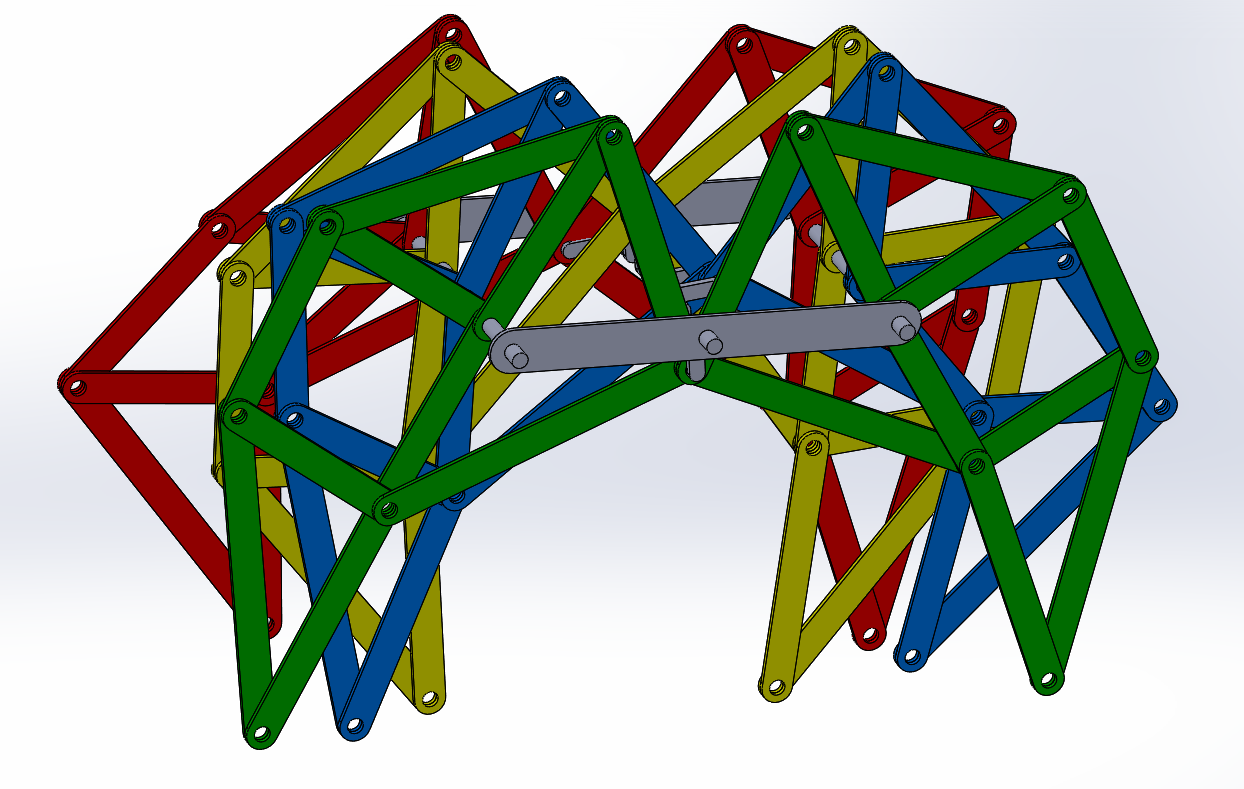

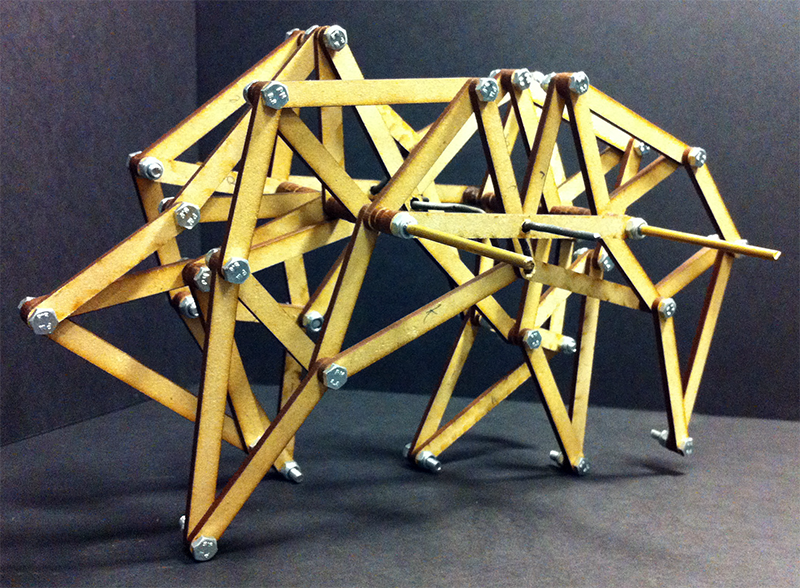

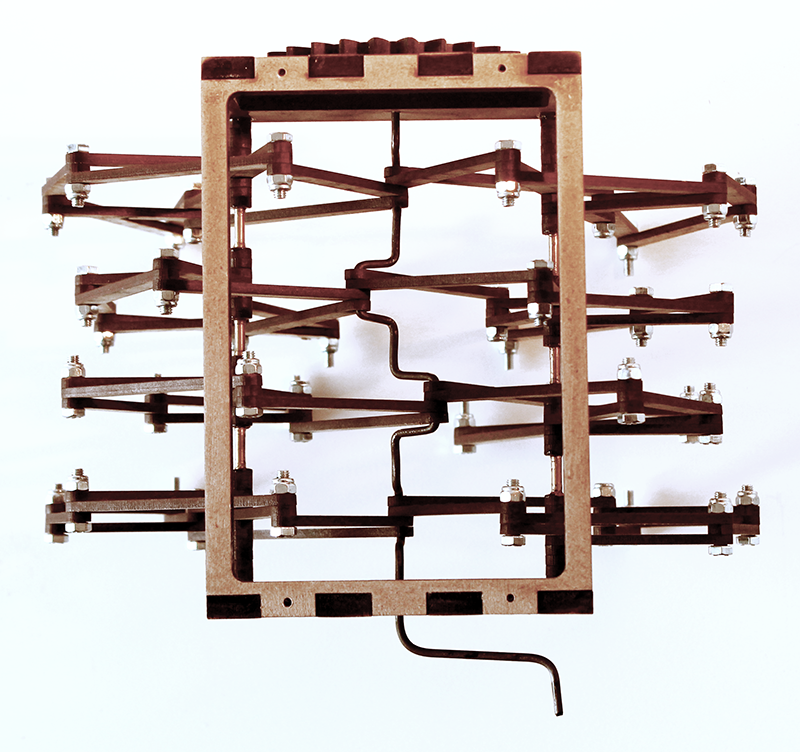

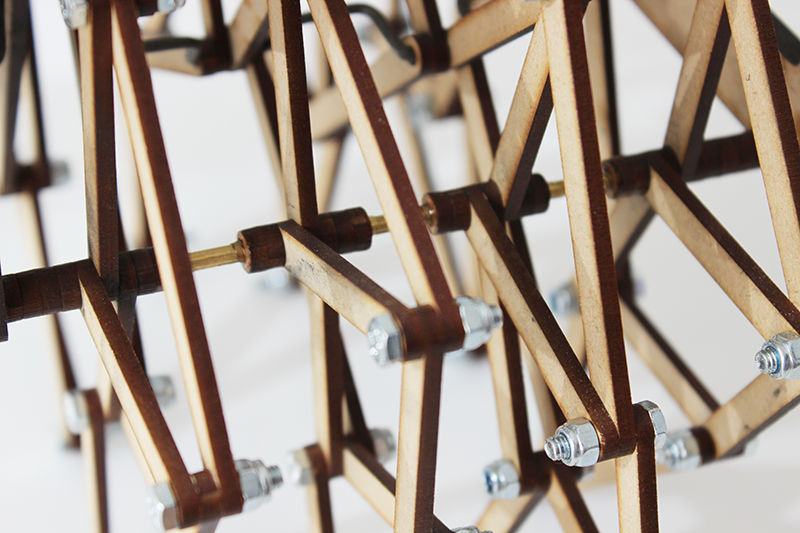

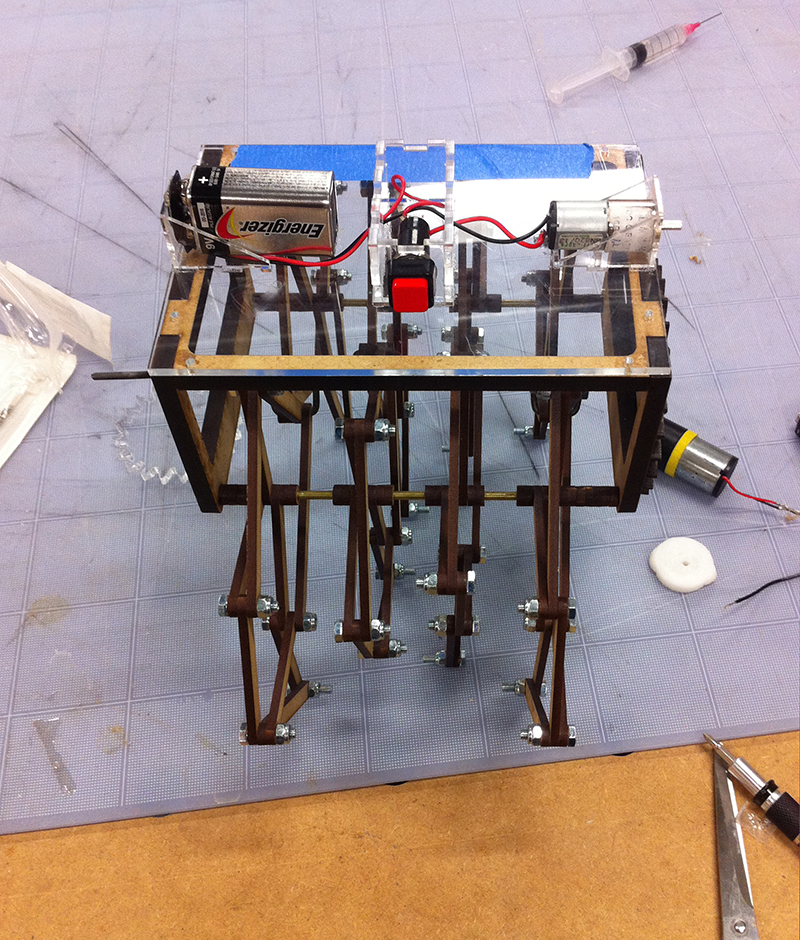

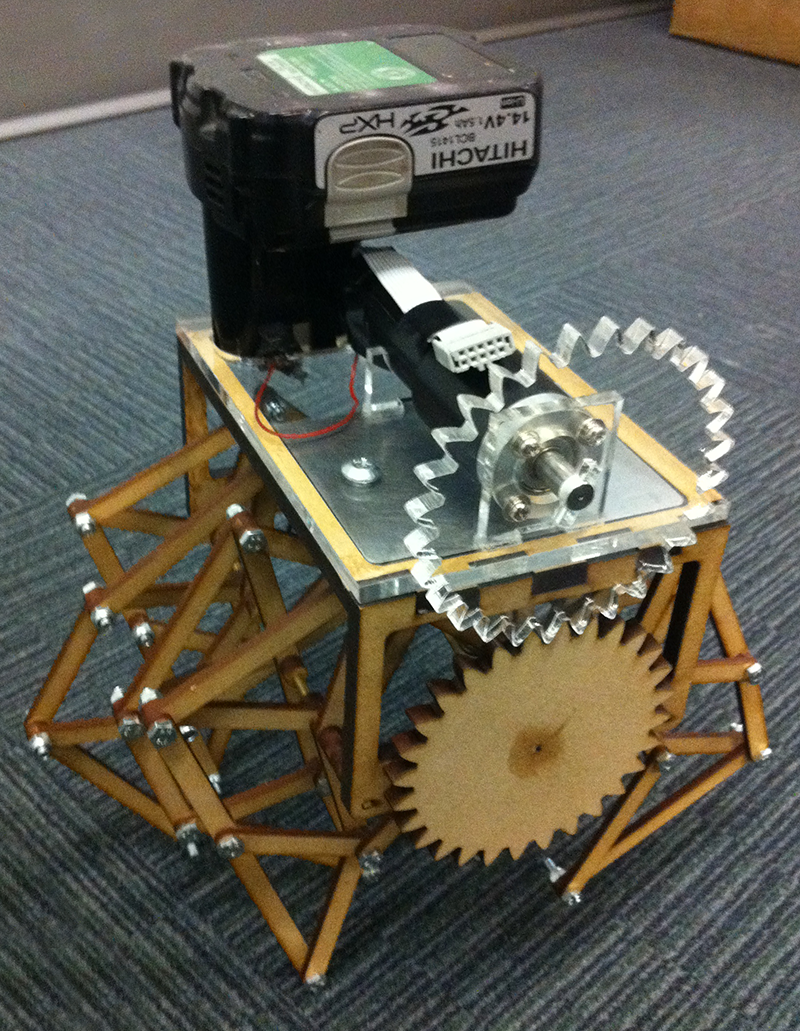

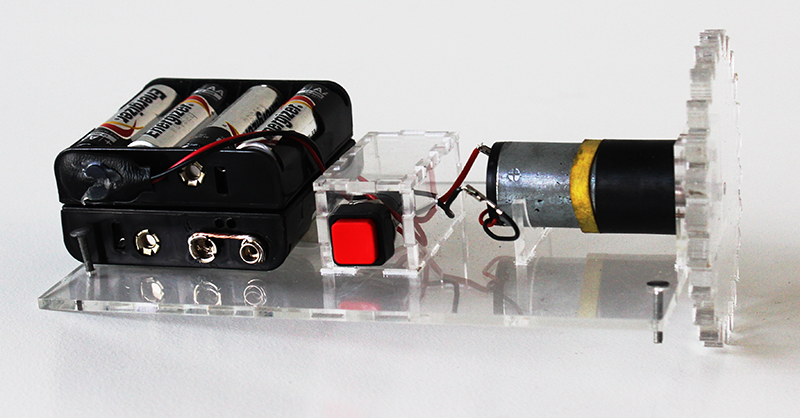

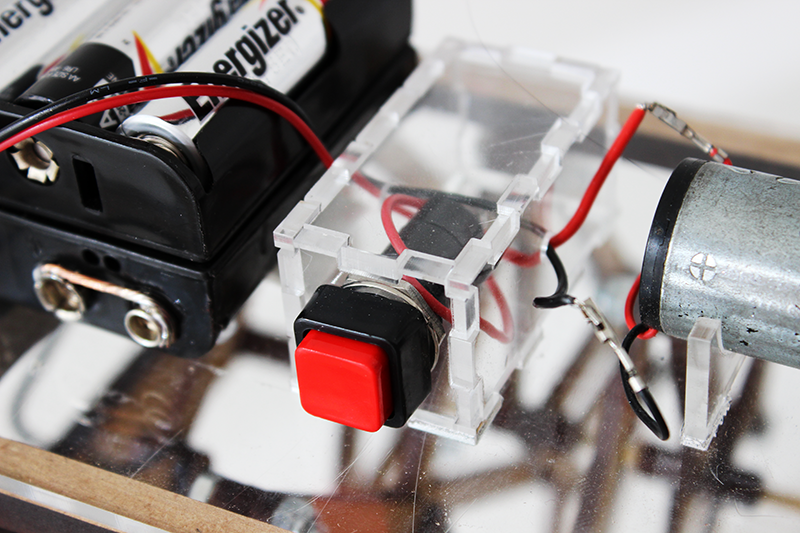

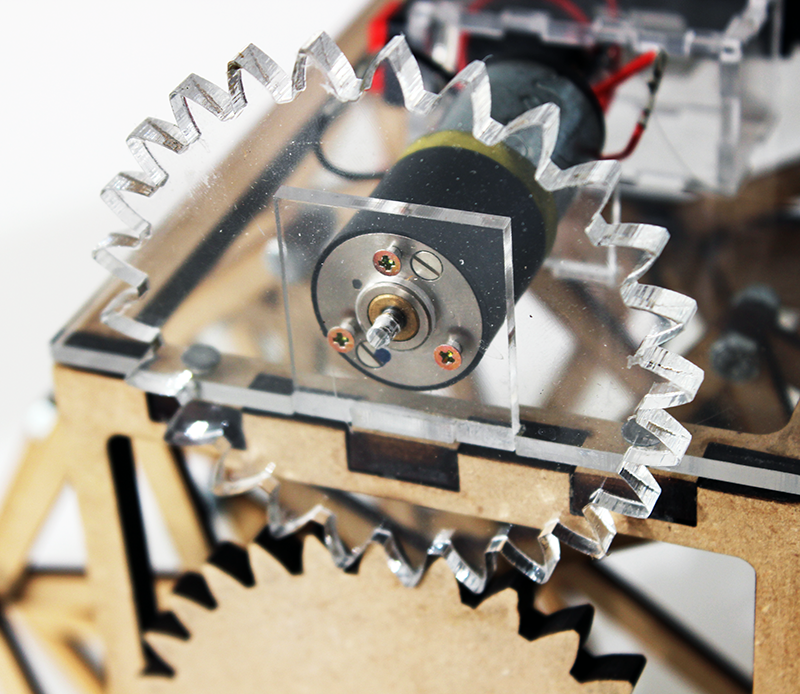

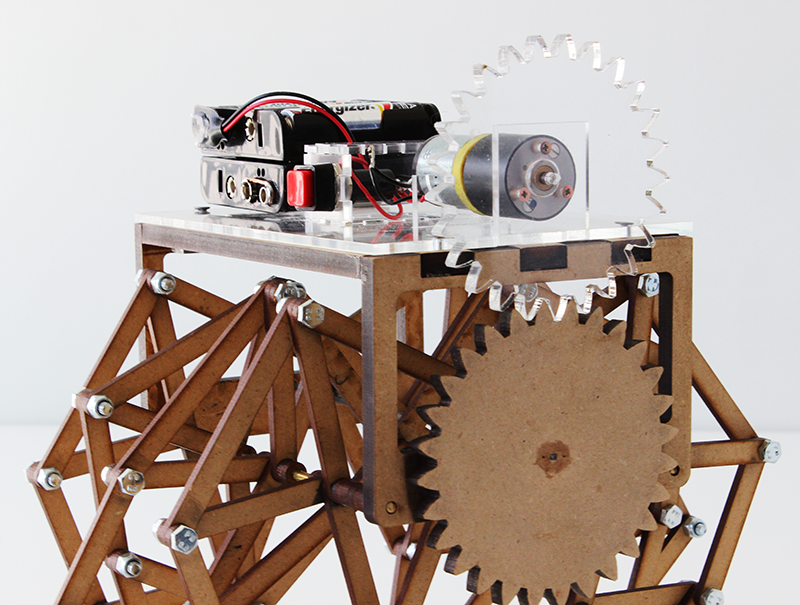

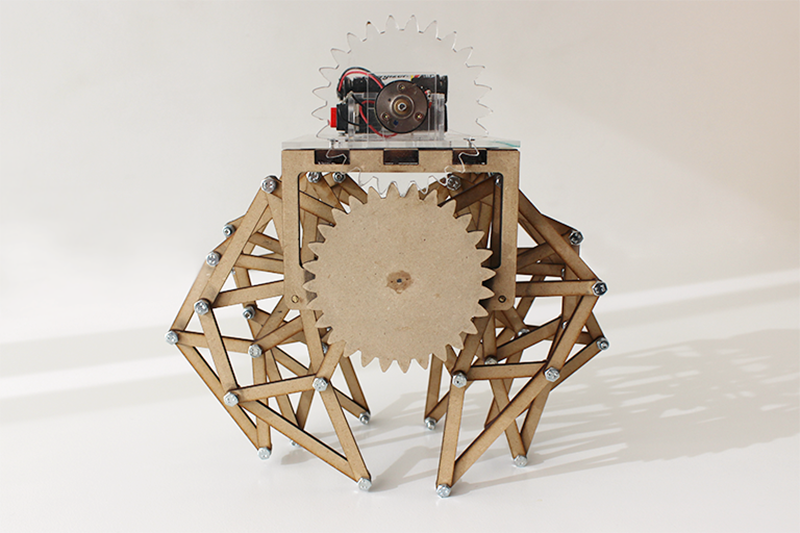

Worked with Dr. Halil Erhan on redeveloping course content during the transition of Representation and Fabrication (IAT 337) to a higher-level course (IAT 437). This included structuring the course projects, as well as designing, prototyping, and building an example mechanical strandbeest, which students would build as their final course project.

| Year | 2017 |
|---|---|
| Roles | UX Designer |
| Collaborators | Evan Aubrey, Masashi Sato, Joey Koblitz, and Vincent Yang |
| Client | Seattle VR Hackathon 5 |
| Tools | Unity, HoloLens |
| Supplemental Resources | Devpost Project Description |

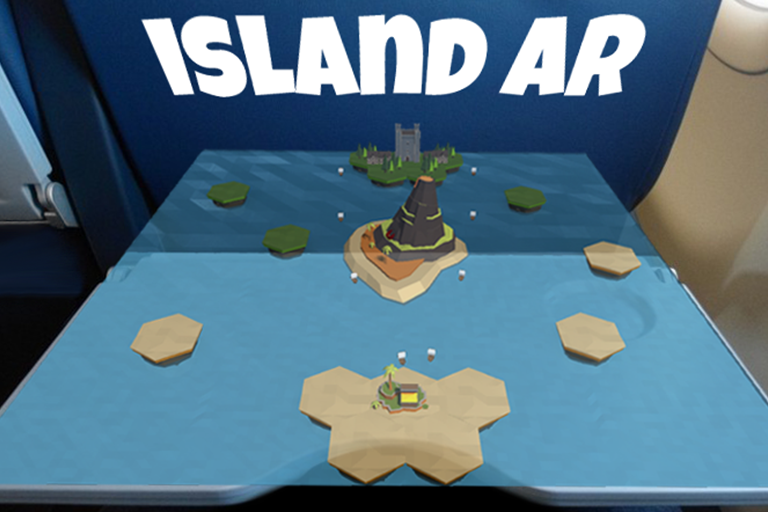

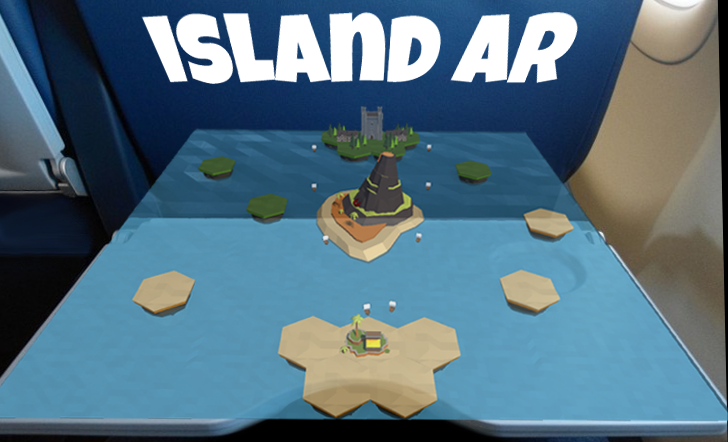

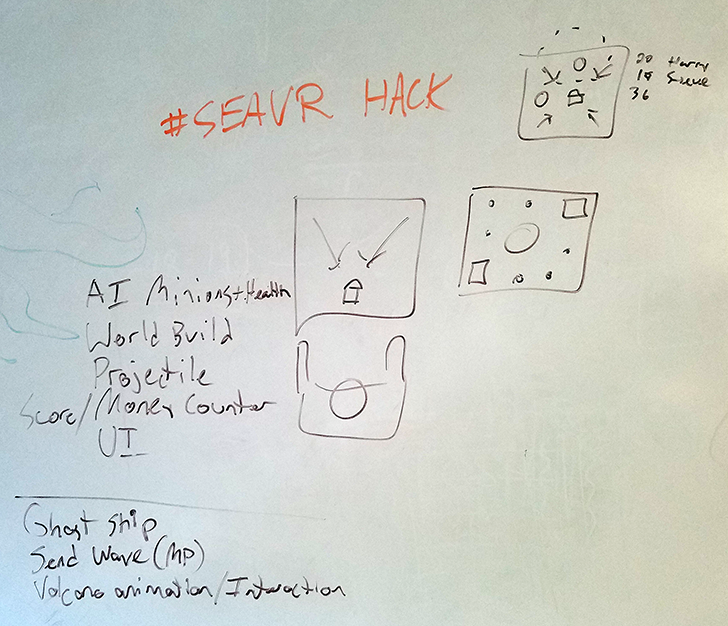

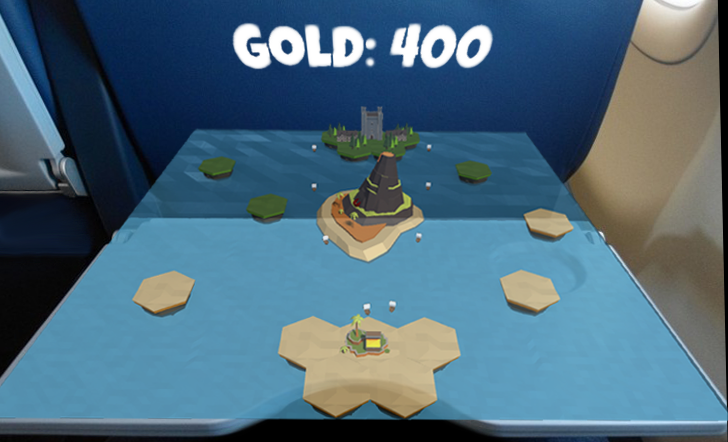

For most of us, flying, while a miraculous result of modern engineering, is often an excruciatingly boring and tedious experience. We are packed into seats next to strangers and left to distract ourselves with battles over armrest territory, helpless against the whims of unruly children desperately crying out for something to relieve their boredom. With ever-shrinking carry-on space available to passengers, the hope of easing our frustrations with recreational pursuits of our own rapidly fades. What if a wide range of activities were available to our children and ourselves to make this experience more enjoyable? What if we could not only entertain ourselves in flight, but also build bonds with strangers and form a "flight community" of sorts during our brief but intense time together? We set out to use the airplane seatback tray table as a play space for a number of different in-flight applications.

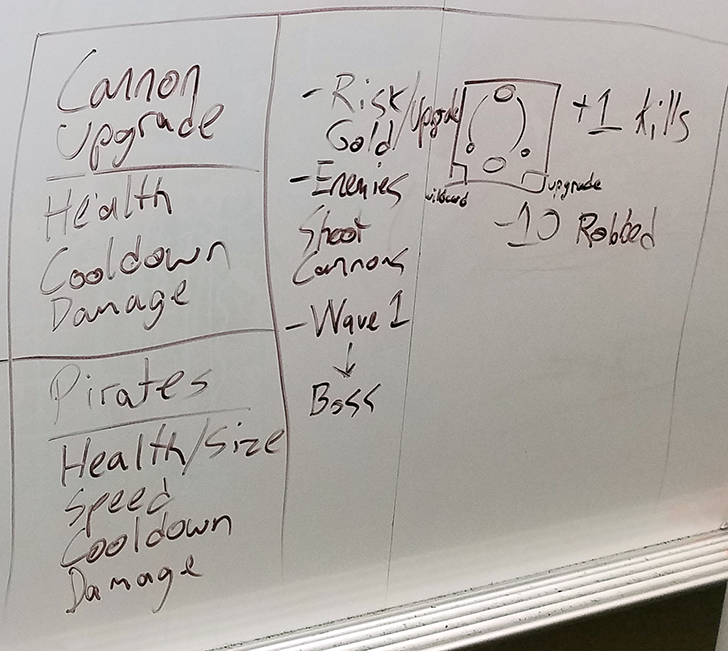

IslandAR is an augmented reality take on the classic tower defense video game. The player defends an island holding their recently discovered treasure from ships trying to steal it. While setting up the app on the HoloLens, the player attaches the play space to the seatback tray table. Players can place cannon defenses around the map to fend off these foes, and can also use their own point of view as a "free roam" cannon. Each time an enemy ship reaches the island, the player loses a bit of gold. The game ends when the player runs out of gold.

We used the Microsoft HoloLens SDK to add gesture recognition for firing cannonballs and placing defenses. Because of our limited time, we built the environment from Unity Asset Store assets and modified them in Blender. We created a simple AI navigation system that lets enemies find their way to the player's gold. We also developed a tracking method so that players can place automated cannons that follow the path of oncoming ships.
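The write-up does not describe how the automated cannons track ships, so the following is only a rough illustration of one common approach: each cannon picks the nearest ship within range and turns toward it at a limited rate every frame. It is a minimal Python sketch, not the team's Unity/C# code; the names `Ship`, `pick_target`, `yaw_towards`, and `step_yaw`, the ground-plane coordinates, and the turn-rate limit are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Ship:
    """Hypothetical enemy ship position on the tray-table ground plane."""
    x: float
    z: float


def pick_target(cx: float, cz: float, ships: Sequence[Ship], max_range: float) -> Optional[Ship]:
    """Return the nearest ship within max_range of the cannon at (cx, cz), or None."""
    best: Optional[Ship] = None
    best_dist = max_range
    for ship in ships:
        dist = math.hypot(ship.x - cx, ship.z - cz)
        if dist <= best_dist:
            best, best_dist = ship, dist
    return best


def yaw_towards(cx: float, cz: float, target: Ship) -> float:
    """Yaw in degrees measured from +z toward +x (a Unity-style convention, assumed here)."""
    return math.degrees(math.atan2(target.x - cx, target.z - cz))


def step_yaw(current: float, desired: float, max_step: float) -> float:
    """Turn from current toward desired by at most max_step degrees, taking the shorter way round."""
    delta = (desired - current + 180.0) % 360.0 - 180.0
    if abs(delta) <= max_step:
        return desired
    return current + math.copysign(max_step, delta)


if __name__ == "__main__":
    ships = [Ship(3.0, 4.0), Ship(10.0, 0.0)]
    target = pick_target(0.0, 0.0, ships, max_range=6.0)  # only the ship 5 units away is in range
    if target is not None:
        desired = yaw_towards(0.0, 0.0, target)
        print(step_yaw(0.0, desired, max_step=10.0))  # limited to a 10 degree turn this frame
```

Calling `step_yaw` once per frame with a fresh `yaw_towards` result makes a cannon sweep smoothly after a moving ship instead of snapping to it; in Unity itself, `Mathf.MoveTowardsAngle` plays a similar role.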

| Year | 2014 |
|---|---|
| Roles | Physical Prototype Designer, Fabricator |
| Collaborators | Alissa Antle, Min Fan, Emily Cramer, and Ying Deng |
| Client | Simon Fraser University |
| Tools | Arduino, Laser Cutting |
| Supplemental Resources | Dr. Alissa Antle's Project Description |

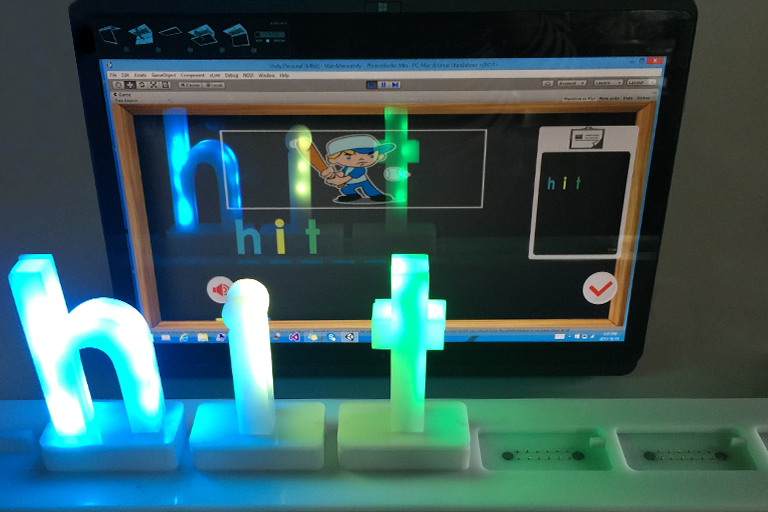

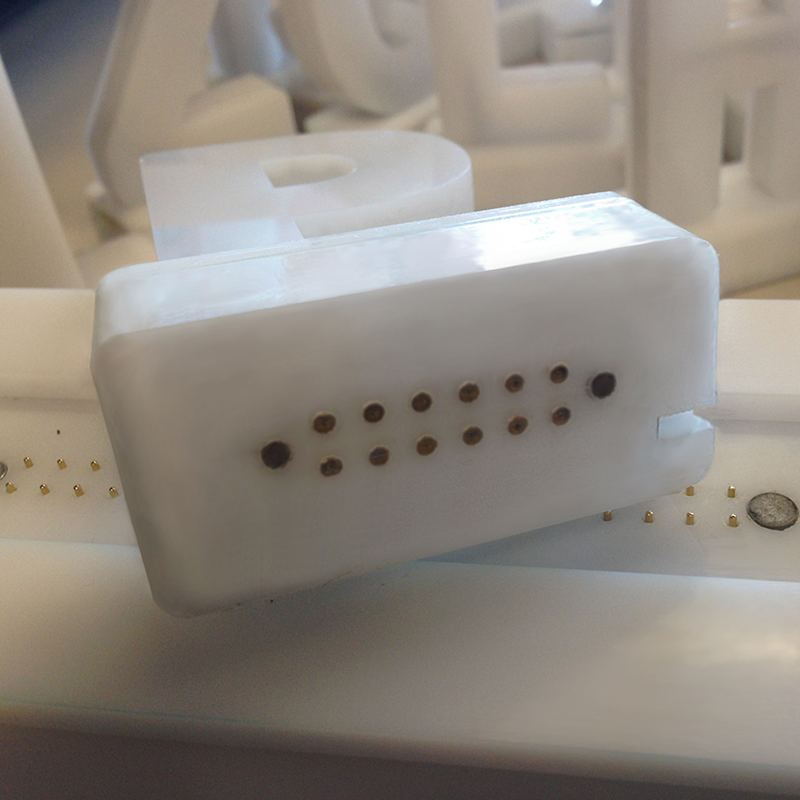

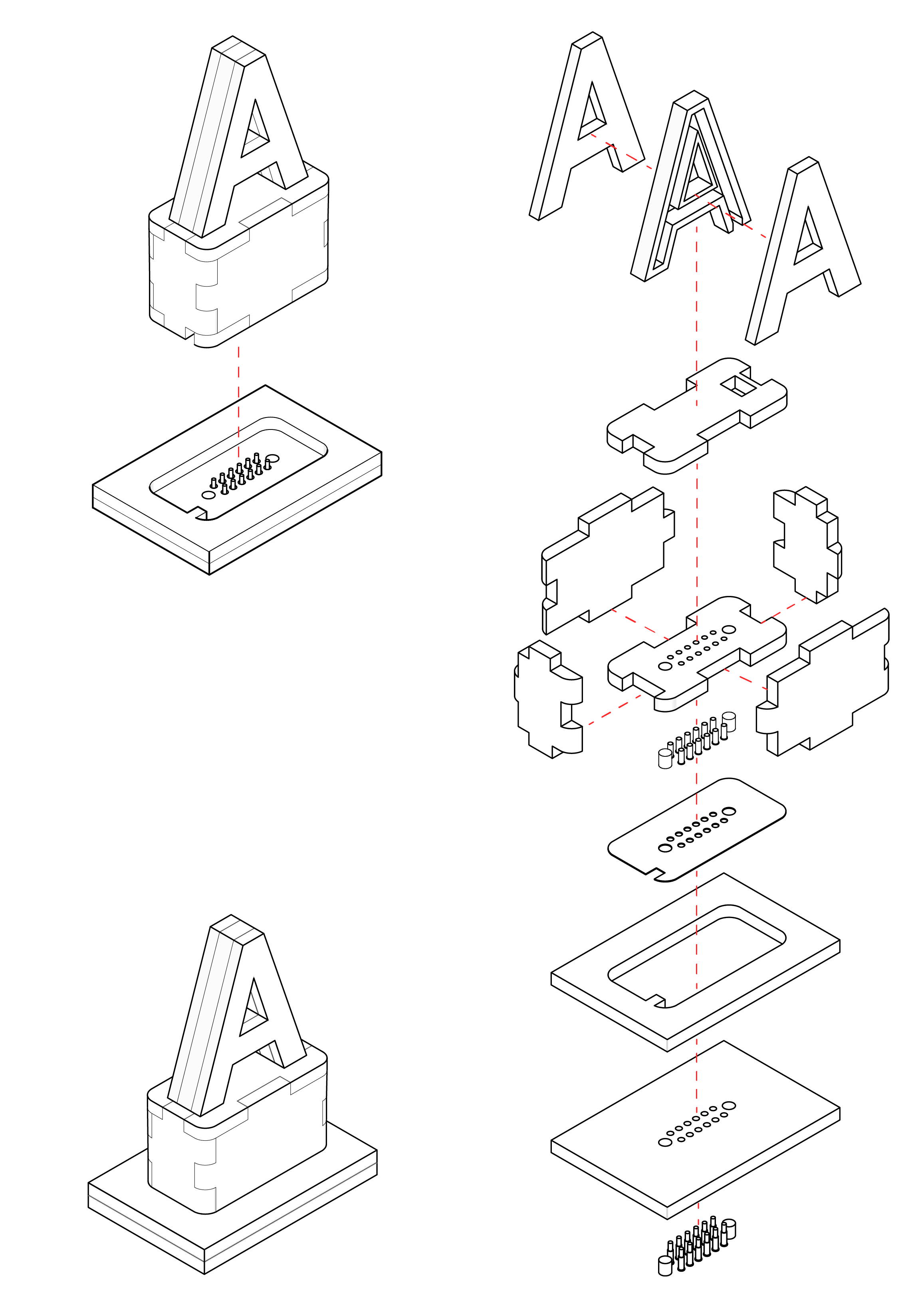

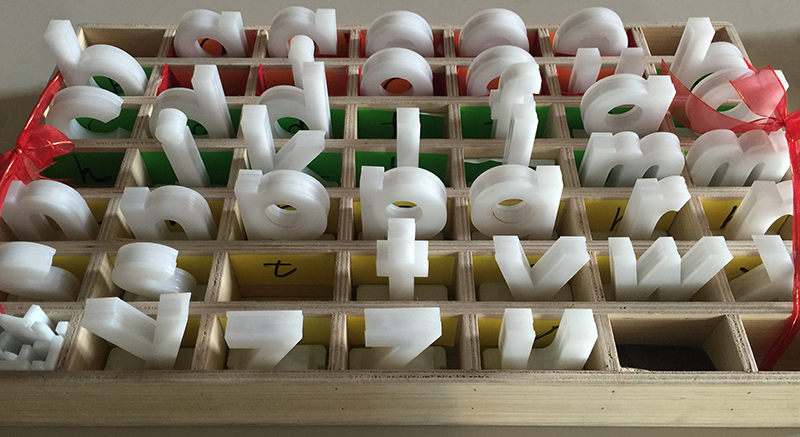

Assisted with the design and fabrication of a tangible, multi-sensory reading system for children with dyslexia. The project involved creating translucent three-dimensional physical letters with circuitry, along with a registration system that lights programmable RGB LED strips within the letters in response to user actions.

Based on theories of dyslexia and multi-sensory instruction, as well as research on tangible user interfaces (TUIs), PhonoBlocks supports phoneme learning and reading for 7- to 8-year-old children. PhonoBlocks comprises a touch-based screen next to a platform with seven slots, and a set of lowercase 3D letters with embedded LED strips. A child interacts by placing one or more tangible letters onto the platform. The system detects the letters and their spatial arrangement through a set of pogo pins embedded in the bottom of each letter, then lights the letters with the appropriate dynamic colour cues. The screen also provides audiovisual feedback, displaying coloured 2D letters and pictures and playing the associated letter sounds.

PhonoBlocks contains seven rule-based learning activities, each with its own colour-coding scheme and activity procedure. It also supports two modes of use: in tutor mode, the tutor directs the learning activity, while in student mode, the student practices what they have learned on their own through a set of word-building games and receives feedback on their answers.
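To make the detect-then-light loop described above more concrete, here is a minimal Python sketch under stated assumptions. It assumes the pogo-pin reader reports one lowercase letter (or nothing) for each of the seven slots, and it uses a hypothetical vowel/consonant colour scheme as a stand-in; it is not one of PhonoBlocks' actual seven schemes, and `read_word` and `colour_cues` are illustrative names.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
NUM_SLOTS = 7  # the PhonoBlocks platform has seven slots

# Hypothetical colour scheme for a single activity (not taken from the original system).
VOWEL_COLOUR: RGB = (255, 0, 0)
CONSONANT_COLOUR: RGB = (0, 0, 255)
VOWELS = set("aeiou")

Slots = List[Optional[str]]  # one lowercase letter per slot, or None if the slot is empty


def _check(slots: Slots) -> None:
    """Reject readings that do not cover exactly one value per slot."""
    if len(slots) != NUM_SLOTS:
        raise ValueError(f"expected {NUM_SLOTS} slot readings, got {len(slots)}")


def read_word(slots: Slots) -> str:
    """Read the letters left to right; empty slots become spaces and outer gaps are trimmed."""
    _check(slots)
    return "".join(letter if letter else " " for letter in slots).strip()


def colour_cues(slots: Slots) -> List[Optional[RGB]]:
    """Choose an LED colour for each slot's letter under the hypothetical scheme; None means unlit."""
    _check(slots)
    cues: List[Optional[RGB]] = []
    for letter in slots:
        if letter is None:
            cues.append(None)
        elif letter in VOWELS:
            cues.append(VOWEL_COLOUR)
        else:
            cues.append(CONSONANT_COLOUR)
    return cues


if __name__ == "__main__":
    platform = [None, "c", "a", "t", None, None, None]
    print(read_word(platform))  # cat
    print(colour_cues(platform))
```

Keeping slot positions (rather than just the letter sequence) is what lets gaps between letters, and therefore spatial arrangement, influence the result.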

| Year | 2013 |
|---|---|
| Roles | Project Owner/Lead |
| Collaborators | Minhaj Samsudeen and Perry Tan |
| Tools | Unity, C# |

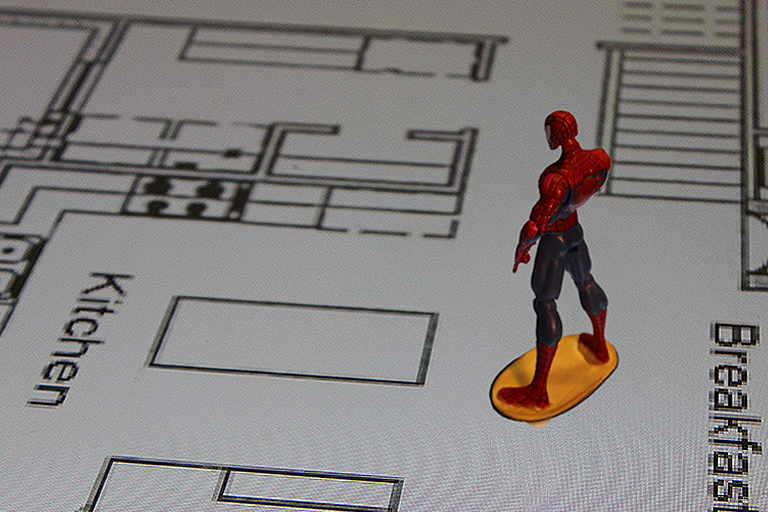

Imagine having a simple, straightforward way of knowing where you are in a building plan, while still being able to immerse yourself quickly and easily in the 3D environment. Using a Microsoft Surface tabletop interface and the Oculus Rift DK2, we built a prototype that controls the user's position and orientation in a virtual environment through a tangible avatar placed on the tabletop display.
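One way to picture the tabletop-to-VR link is as a calibrated mapping from the avatar's position and rotation on the table to the camera pose in the building model. The Python sketch below rests on several assumptions: a simple scale-and-offset calibration, a table that reports positions in pixels with y growing downward and rotation in degrees clockwise from the table's top edge, and a fixed eye height. `PlanMapping`, `avatar_to_world`, and the 1.7 m default are hypothetical, not details of the original Unity prototype.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PlanMapping:
    """Hypothetical calibration between the tabletop display and the building model."""
    metres_per_pixel: float  # scale of the floor plan shown on the table
    origin_x: float          # world x (metres) under table pixel (0, 0)
    origin_z: float          # world z (metres) under table pixel (0, 0)


def avatar_to_world(px: float, py: float, angle_deg: float,
                    mapping: PlanMapping, eye_height: float = 1.7) -> Tuple[float, float, float, float]:
    """Convert the avatar's table pose to a VR camera pose (x, y, z, yaw in degrees)."""
    x = mapping.origin_x + px * mapping.metres_per_pixel
    # Table y grows downward, but "up the plan" is assumed to be +z in the world, so flip it.
    z = mapping.origin_z - py * mapping.metres_per_pixel
    # After that flip, clockwise on the table is also clockwise when the world is viewed from above.
    yaw = angle_deg % 360.0
    return x, eye_height, z, yaw


if __name__ == "__main__":
    mapping = PlanMapping(metres_per_pixel=0.01, origin_x=0.0, origin_z=0.0)
    print(avatar_to_world(100, 200, 90, mapping))
```

Because the mapping is a pure function of the avatar pose, sliding or rotating the physical avatar immediately moves the headset viewpoint, while the plan on the table keeps showing where the user is.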

| Year | 2012 |
|---|---|
| Roles | Project Initiator and Lead, Designer, Fabricator |
| Collaborators | Amila Dias and Jamie Horwitz |

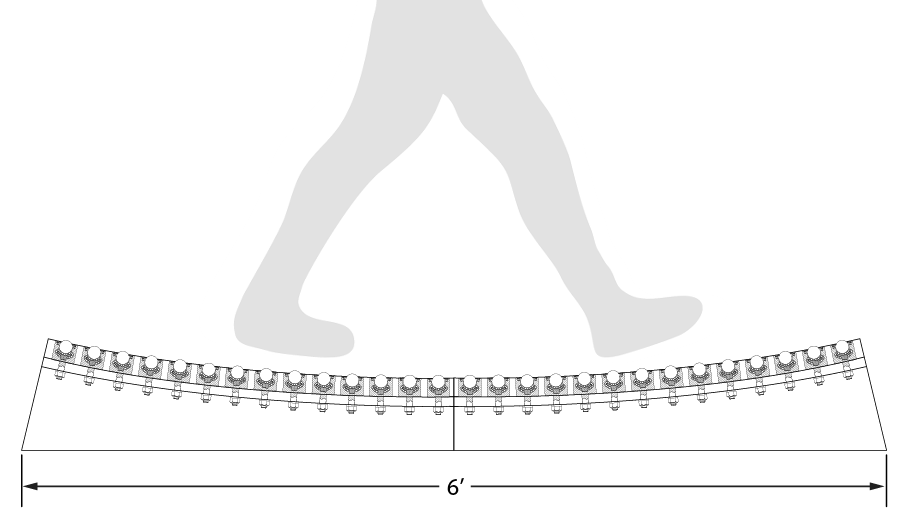

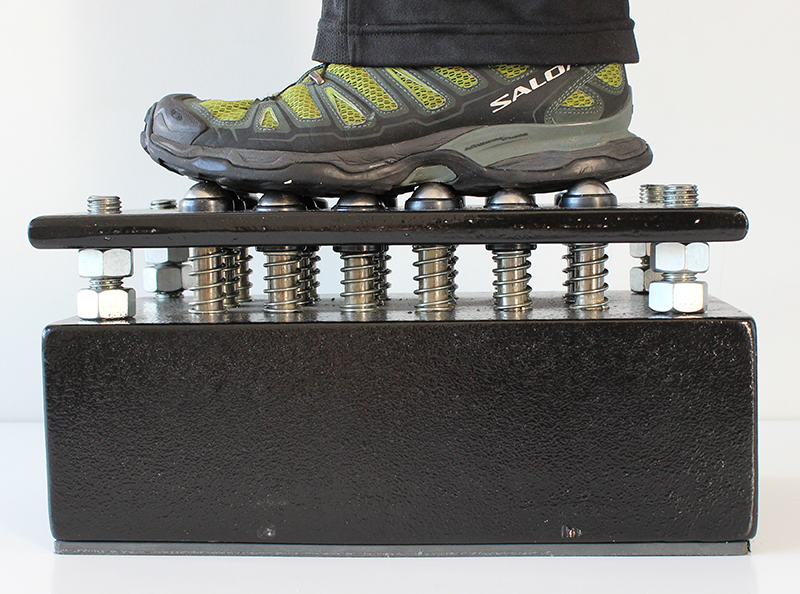

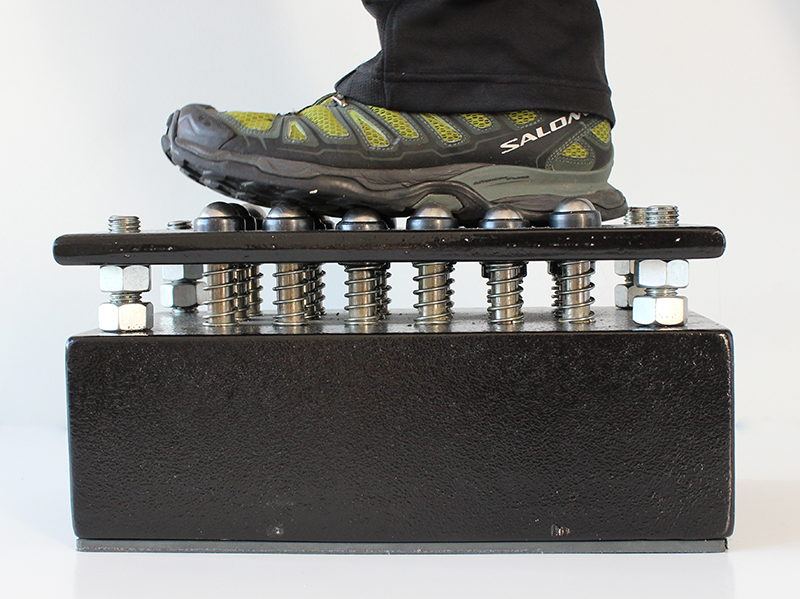

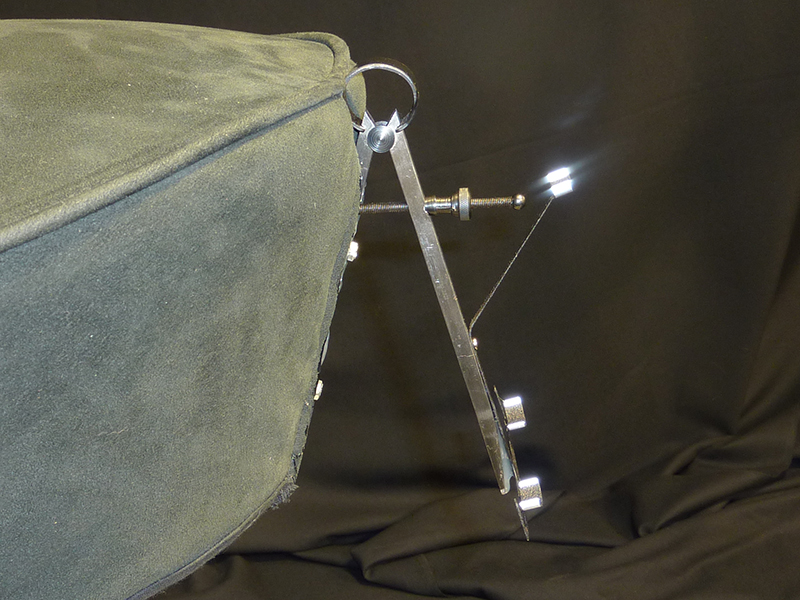

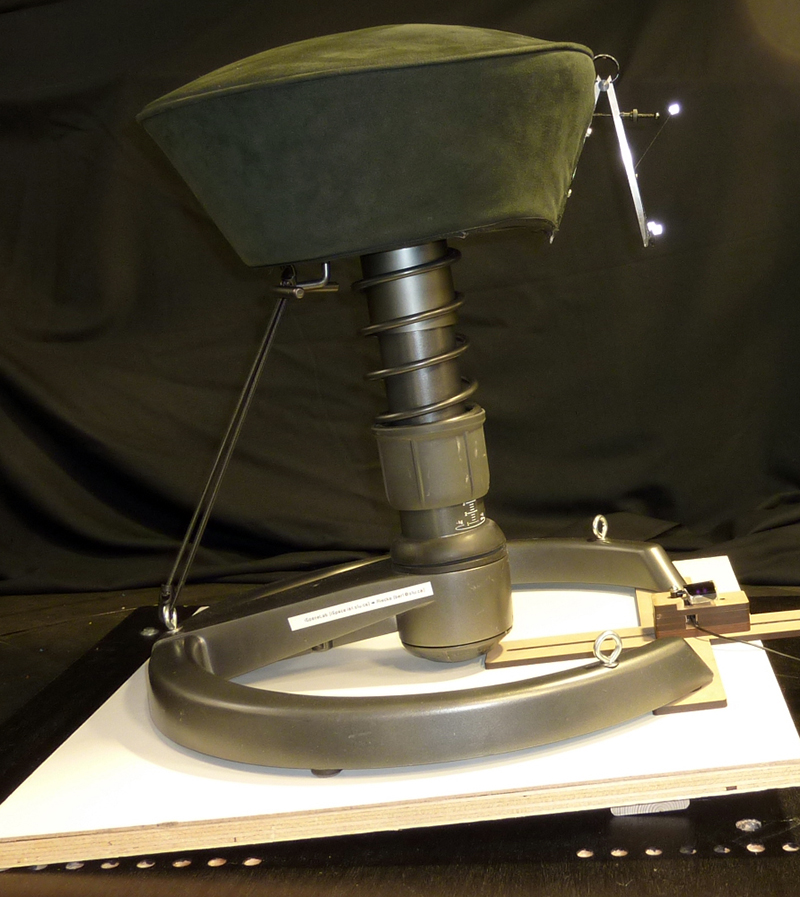

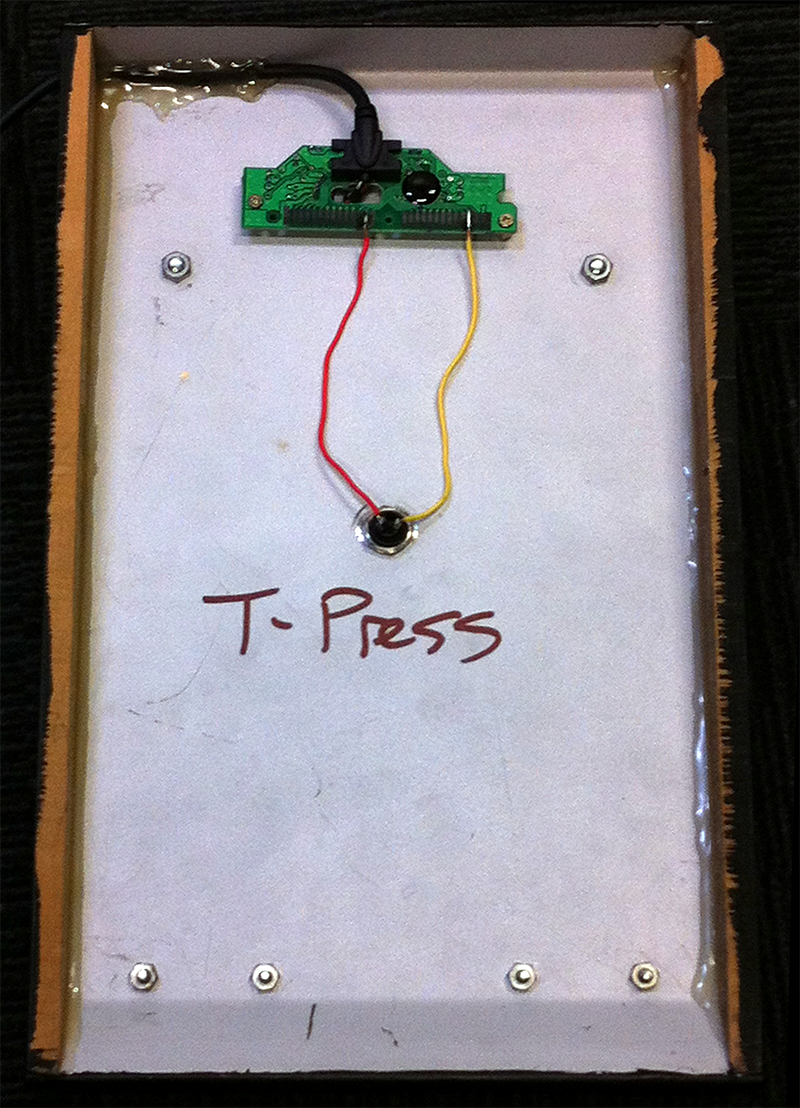

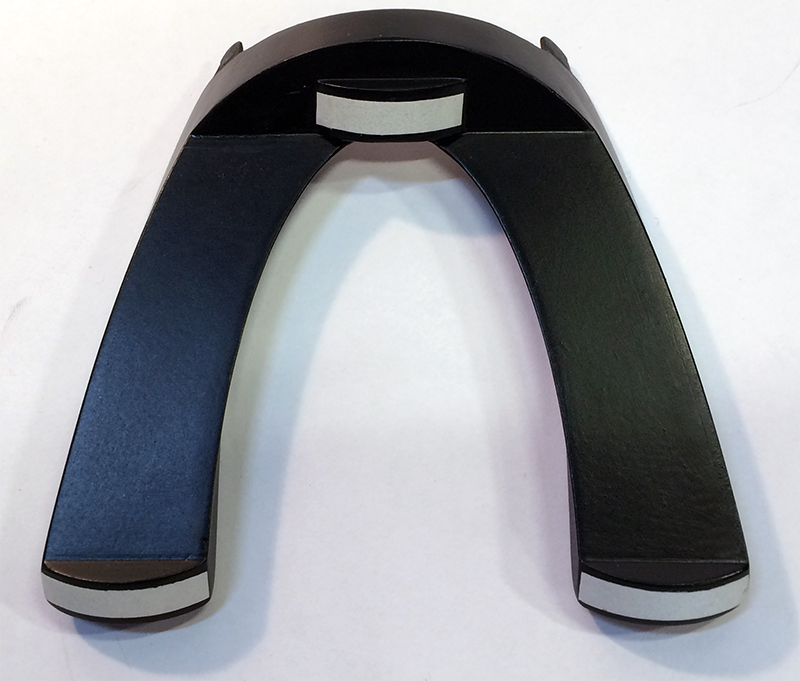

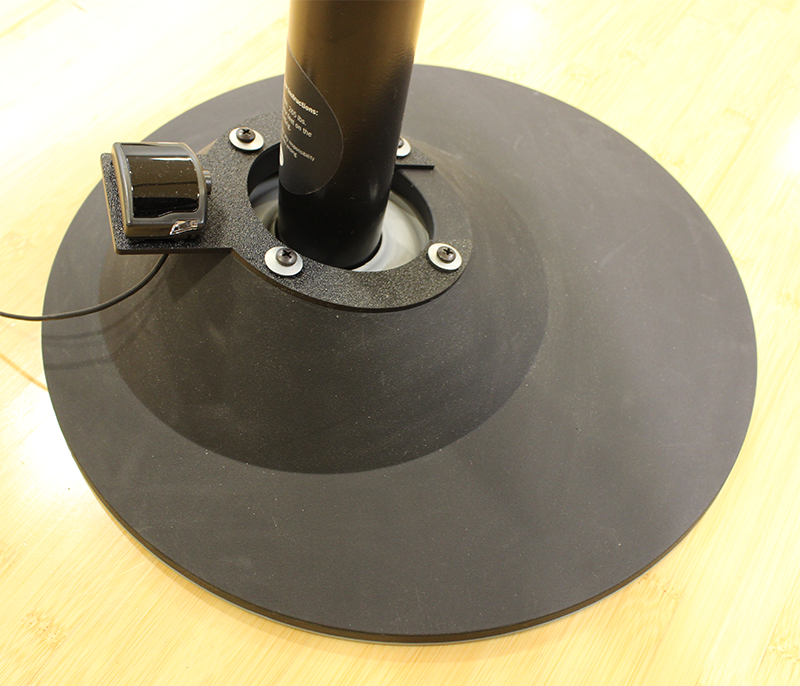

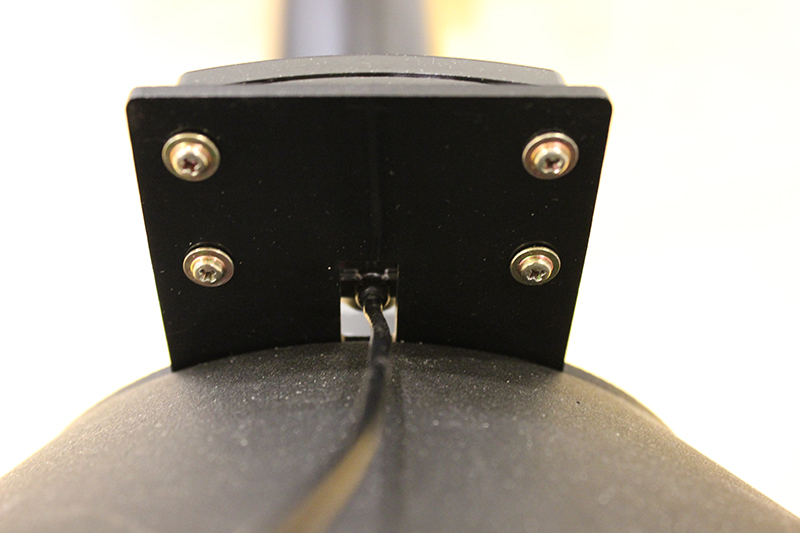

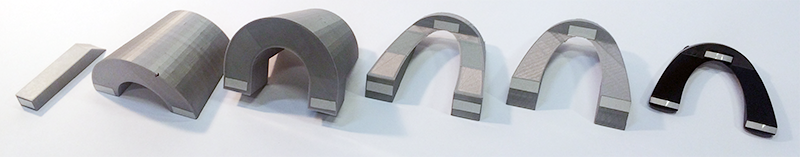

The limited physical space available for walking in VR is one of the primary challenges in the field today. We therefore explored an omnidirectional treadmill for VR built from arrays of ball transfer units. Basic ball transfer arrays proved slippery and dangerous, so we iterated through designs that would remain safe without requiring belts or harnesses. We designed and implemented a sleeve and spring mechanism to overcome these problems, and created a functional physical prototype.